For a large web search application, our team was able to provide keyword highlighting in optical character recognition (OCR) analyzed images for a corpus of multiple million newspaper pages efficiently from a storage perspective and from a performance and scalability perspective.

The web application has a few million pages of OCR text with word coordinates stored in hOCR or ALTO XML format. We’ve indexed the text on these pages with language analysis capabilities. For instance, we’ve stemmed the words as we indexed them (a search for “garden” should return pages that contain “gardens”, “gardening”, and “gardener” as well). In our search application, when we display results we display snippets of the original text surrounding the keyword matches, with the keywords in the text snippets highlighted. Solr and Elasticsearch provide these capabilities, but with some overhead: if we wanted to retrieve highlighted snippets and word coordinates with our search results, we’d have to nearly double our storage footprint by storing a copy of our OCR data in our search index. If our corpus is large, we may not want to store this second copy; we’d like to have another means of retrieving hit highlights and snippet text. Python and NLTK can be used to provide this capability as a microservice without adding any storage overhead.

Python and NLTK provide hit highlighting capabilities that Solr and Elasticsearch provide, but without the additional storage overhead that Solr and Elasticsearch require. Below are several questions and answers addressing search engines indexing and retrieving documents, stemming, use of NLTK for generating map of word stems and word variants and use of this map for hit highlighting of word variants.

What do Solr and Elasticsearch do when they index documents? What do they do when they retrieve documents that match a search query?

Suppose we have two documents. For this example, they are very short documents.

Document1: “And time may bring various yet unforeseen considerations into play, either to simplify or to complicate the whole problem beyond present comprehension.”

Document2: “At the same time, comprehensive tests are being given in science, health, and algebra.”

We build what is called an “inverted index”, which can be thought of as a map from individual words to the document records in which they can be found, an excerpt from which might look something like this:

“comprehension”: {{Document1}},

“comprehensive”: {{Document2}},

…

“time”: {{Document1, Document2}}

Retrieving search results is akin to looking up entries in this map using the search terms as the keys. Now we see that when we search for “comprehension” we get back Document1. When we search for “comprehensive” we get back Document2. And when we search for “time”, we get back two results: Document1 and Document2.

What is stemming and how does it work? How does it change our inverted index?

Stemming is the process of reducing inflected or derived words to their root form.

If we run the words in our documents through the Snowball stemmer at index time we find that for some words we get the same root: “comprehension” and “comprehensive” both stem to “comprehens”.

Now our inverted index looks like this:

“comprehens”: {{Document1, Document2}},

…

“time”: {{Document1, Document2}}

Stemming also maps plural and singular forms to the same root. This is nice because a search for “bicycle” will also retrieve documents that contain “bicycles”.

How do we store documents vs indexing documents?

With Solr and Elasticsearch, we can index the text of documents without actually storing the text. We can build the inverted index, where the stems are the keys in the map, and they point to the record representing the document where the original word can be found. But if we don’t store the text, we have no information about what the original form of the word was or where it was located in the document. This is why, in order to enable the feature of retrieving highlighted text snippets in Solr and Elasticsearch, we have to store a copy of the full text of our documents in the search index.

We might not want to do this if we’re trying to save storage space. After all, it makes perfect sense to have the record representing the document simply contain a URI where the original document can be found, instead of including a full text copy of the document in the search index. This way we’ve only stored the document once: in the file located at the URI.

The problem this causes though is that we’ve lost the exact mapping of stem to original word. We only know that given a stem, some variant of it exists in DocumentN, but we don’t know what that variant is.

How do we reverse the stemming procedure?

If we had a list of every word (in say, the English language, supposing that our documents are in English), we could run them through the Snowball stemmer and save the results. This way we can generate a map from stems to original variants.

For example, given the stem “comprehens”, we can map this stem to a list of its inflected forms:

“comprehens”: {{“comprehensibility”, “comprehensible”, “comprehension”, “comprehensive”, “comprehensively”, “comprehensiveness”}}

Now, when a user searches “comprehension” in our search application, we can stem it to “comprehens”, retrieve the list of its inflected forms, and highlight those hits on pages that match the search query.

The following Python code illustrates how this can be done using NLTK, the natural language processing toolkit:

from collections import defaultdict

from nltk.stem.snowball import SnowballStemmer

STEMMER = SnowballStemmer(“english”)

def generate_stem_map(wordlist):

stem_map = defaultdict(list)

for word in wordlist:

stem = STEMMER.stem(word)

# we don’t have to store words that stem to themselves:

if stem == word:

continue

else:

stem_map{{stem}}.append(word)

return stem_map

Now, in our search application, when a keyword query arrives, we can use a function to “explode” the keyword into its variants:

STEM_MAP = generate_stem_map(wordlist)

def explode_search_term(search_term):

stem = STEMMER.stem(search_term)

exploded_terms = stem_map.get(stem, {{}})

# we include the original search term in the list of

# exploded terms, because we didn’t save words that

# stem to themselves in the stem map:

exploded_terms.append(search_term)

return exploded_terms

Whichever pages Solr or Elasticsearch return as matches to this query, we can simply search the page using Python to see which variants of the search query exist on the page. It’s a set intersection operation: our exploded search terms make up one set, and the words on the search result page make up the other set. Set intersections can be done in Python using the “&” operator:

matches = set(exploded_search_terms) & set(words_on_page)

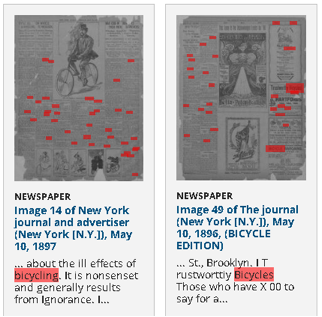

Now suppose that we have retrieved our “words_on_page” list by parsing the source hOCR or ALTO XML file on-the-fly, we also have word coordinate data for our matches, so we can display to the user an image of the page with highlights overlaid at the match locations on the page. The result ends up looking like this. The search term was “bicycle” but notice how the results include “bicycles” and “bicycling”:

In conclusion, it is not necessary to store a duplicate copy of your OCR data in your Solr or Elasticsearch index just to be able to display highlights on word variants in your search results. All it takes is some clever use of Python and NLTK.